K8S Service的基本概念和使用方式及实现原理

描述

【作者】陈成,中国联通软件研究院容器云研发工程师,公共平台与架构研发事业部云计算研发组长,长期从事大规模基础平台建设相关工作,先后从事Mesos、KVM、K8S等研究,专注于容器云计算框架、集群调度、虚拟化等。

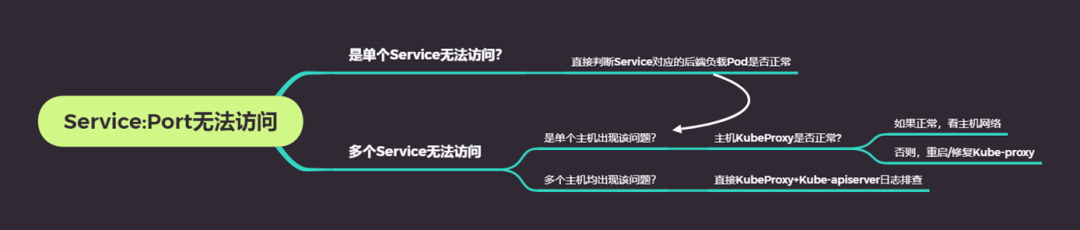

故事的开始,让我们先从一件生产故障说起。5月29日,内部某系统出现大规模访问Service故障,发现Pod容器内无法正常访问ServiceIP:Port,整个故障持续时间超过12h,相关运维支撑人员没有找到根本原因和解决办法。

经过复盘,我们发现,大家对于K8S Service的原理不够清晰,导致对问题的定位不能做得到快速准确,如果当时能够按照如下的思路去思考问题,排查过程不至于花费如此久的时间。

下面,我们就来细说一下Service在Kubernetes中的作用、使用方法及原理。

Service是一种暴露一组Pod网络的抽象方式,K8S Service提供了针对于一组Pod的负载均衡的暴露。通过这样的方式,可以避免不同的pod之间访问时需要知晓对应pod网络信息的痛苦。例如:前端->后端,由于前端POD IP随时变动,后端亦如此,如何处理前端POD和后端POD的通信,就需要Service这一抽象,来保证简单可靠。

Service的使用

1、典型服务配置方法

当配置了selector之后,Service Controller会自动查找匹配这个selector的pod,并且创建出一个同名的endpoint对象,负责具体service之后连接。

apiVersion: v1kind: Servicemetadata:name: my-servicespec:selector:app: MyAppports:protocol: TCPport: 80targetPort: 9376

2、配置没有selector的服务

没有selector的service不会出现Endpoint的信息,需要手工创建Endpoint绑定,Endpoint可以是内部的pod,也可以是外部的服务。

apiVersion: v1kind: Servicemetadata:name: my-servicespec:ports:protocol: TCPport: 80targetPort: 9376---apiVersion: v1kind: Endpointsmetadata:name: my-servicesubsets:addresses:ip: 192.0.2.42ports:port: 9376

Service的类型

1.CluserIP

kubectl expose pod nginx --type=CluserIP --port=80 --name=ng-svcapiVersion: v1kind: Servicemetadata:name: ng-svcnamespace: defaultspec:selector:name: nginxclusterIP: 11.254.0.2ports:name: httpport: 80protocol: TCPtargetPort: 1234sessionAffinity: Nonetype: ClusterIP

2.LoadBalance

3.NodePortapiVersion: v1kind: Servicemetadata:name: my-servicespec:selector:app: MyAppports:protocol: TCPport: 80targetPort: 9376clusterIP: 10.0.171.239type: LoadBalancerstatus:loadBalancer:ingress:ip: 192.0.2.127

4.ExternalName5.HeadlessapiVersion: v1kind: Servicemetadata:name: my-servicespec:type: NodePortselector:app: MyAppports:port: 80targetPort: 80nodePort: 30007

对定义了选择算符的无头服务,Endpoint 控制器在 API 中创建了 Endpoints 记录, 并且修改 DNS 配置返回 A 记录(IP 地址),通过这个地址直接到达 Service 的后端 Pod 上。apiVersion: v1kind: Servicemetadata:labels:run: curlname: my-headless-servicenamespace: defaultspec:clusterIP: Noneports:port: 80protocol: TCPtargetPort: 80selector:run: curltype: ClusterIP

# ping my-headless-servicePING my-headless-service (172.200.6.207): 56 data bytes64 bytes from 172.200.6.207: seq=0 ttl=64 time=0.040 ms64 bytes from 172.200.6.207: seq=1 ttl=64 time=0.063 ms

对没有定义选择算符的无头服务,Endpoint 控制器不会创建 Endpoints 记录。然而 DNS 系统会查找和配置,无论是:

-

对于 ExternalName 类型的服务,查找其 CNAME 记录

-

对所有其他类型的服务,查找与 Service 名称相同的任何 Endpoints 的记录

Service的实现方式

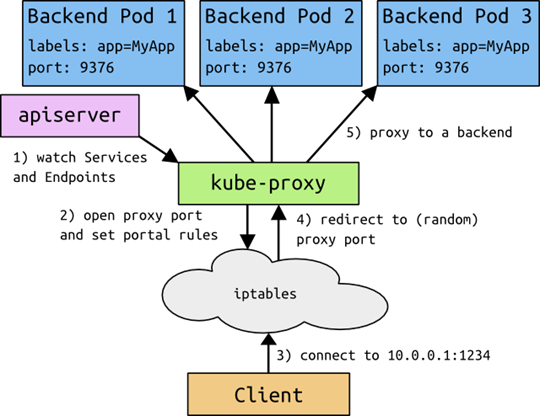

1.用户态代理访问

即:当对于每个Service,Kube-Proxy会在本地Node上打开一个随机选择的端口,连接到代理端口的请求,都会被代理转发给Pod。那么通过Iptables规则,捕获到达Service:Port的请求都会被转发到代理端口,代理端口重新转为对Pod的访问

这种方式的缺点是存在内核态转为用户态,再有用户态转发的两次转换,性能较差,一般不再使用

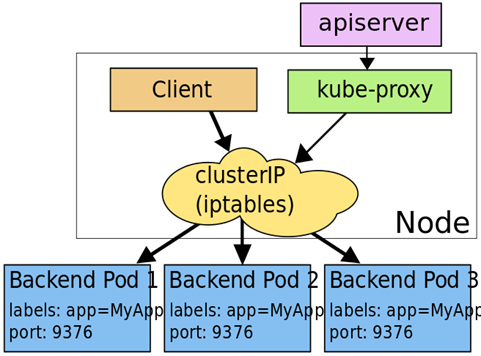

2.Iptables模式

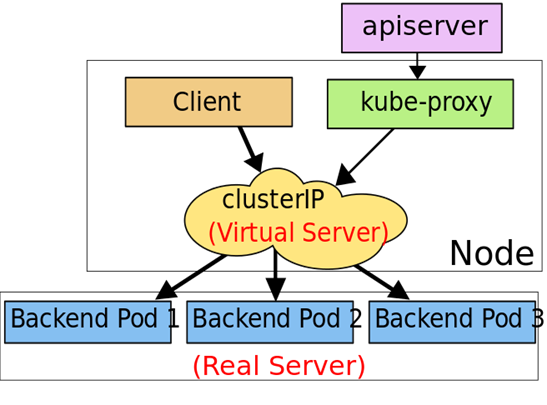

3.Ipvs模式

Service Iptables实现原理

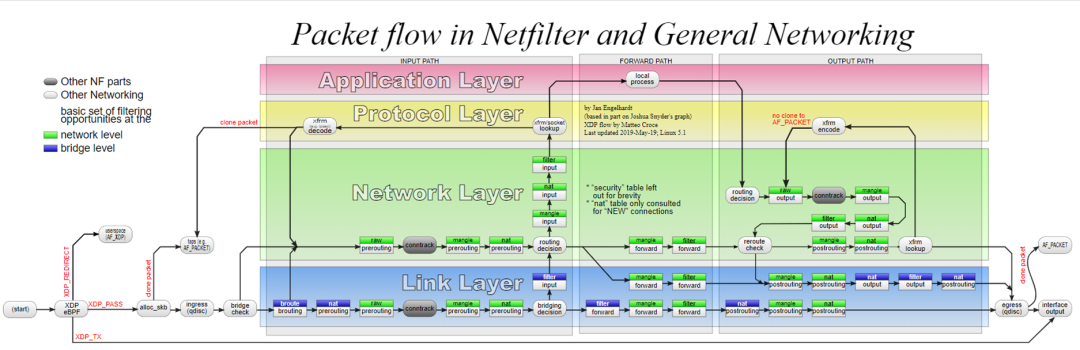

Iptables表和链及处理过程

Service的Traffic流量将会通过prerouting和output重定向到kube-service链

-A PREROUTING -m comment --comment "kubernetes service portals" -j KUBE-SERVICES-A POSTROUTING -m comment --comment "kubernetes postrouting rules" -j KUBE-POSTROUTING-A OUTPUT -m comment --comment "kubernetes service portals" -j KUBE-SERVICES

-

KUBE-SERVICES->KUBE-SVC-XXXXXXXXXXXXXXXX->KUBE-SEP-XXXXXXXXXXXXXXXX represents a ClusterIP service

-

KUBE-NODEPORTS->KUBE-SVC-XXXXXXXXXXXXXXXX->KUBE-SEP-XXXXXXXXXXXXXXXX represents a NodePort service

几种不同类型的Service在Kube-Proxy启用Iptables模式下上的表现

-

ClusterIP

-A KUBE-SERVICES ! -s 172.200.0.0/16 -d 10.100.160.92/32 -p tcp -m comment --comment "default/ccs-gateway-clusterip:http cluster IP" -m tcp --dport 30080 -j KUBE-MARK-MASQ-A KUBE-SERVICES -d 10.100.160.92/32 -p tcp -m comment --comment "default/ccs-gateway-clusterip:http cluster IP" -m tcp --dport 30080 -j KUBE-SVC-76GERFBRR2RGHNBJ-A KUBE-SVC-76GERFBRR2RGHNBJ -m comment --comment "default/ccs-gateway-clusterip:http" -m statistic --mode random --probability 0.33333333349 -j KUBE-SEP-GBVECAZBIC3ZKMXB-A KUBE-SVC-76GERFBRR2RGHNBJ -m comment --comment "default/ccs-gateway-clusterip:http" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-PVCYYXEU44D3IMGK-A KUBE-SVC-76GERFBRR2RGHNBJ -m comment --comment "default/ccs-gateway-clusterip:http" -j KUBE-SEP-JECGZLHE32MEARRX-A KUBE-SVC-CEZPIJSAUFW5MYPQ -m comment --comment "kubernetes-dashboard/kubernetes-dashboard" -j KUBE-SEP-QO6MV4HR5U56RP7M-A KUBE-SEP-GBVECAZBIC3ZKMXB -s 172.200.6.224/32 -m comment --comment "default/ccs-gateway-clusterip:http" -j KUBE-MARK-MASQ-A KUBE-SEP-GBVECAZBIC3ZKMXB -p tcp -m comment --comment "default/ccs-gateway-clusterip:http" -m tcp -j DNAT --to-destination 172.200.6.224:80...

-

NodePort

apiVersion: v1kind: Servicemetadata:labels:app: ccs-gatewayspec:clusterIP: 10.101.156.39externalTrafficPolicy: Clusterports:name: httpnodePort: 30081port: 30080protocol: TCPtargetPort: 80selector:app: ccs-gatewaysessionAffinity: Nonetype: NodePort

-A KUBE-NODEPORTS -p tcp -m comment --comment "default/ccs-gateway-service:http" -m tcp --dport 30081 -j KUBE-MARK-MASQ-A KUBE-NODEPORTS -p tcp -m comment --comment "default/ccs-gateway-service:http" -m tcp --dport 30081 -j KUBE-SVC-QYHRFFHL5VINYT2K############################-A KUBE-SVC-QYHRFFHL5VINYT2K -m comment --comment "default/ccs-gateway-service:http" -m statistic --mode random --probability 0.50000000000 -j KUBE-SEP-2NPKETIWKKVUXGCL-A KUBE-SVC-QYHRFFHL5VINYT2K -m comment --comment "default/ccs-gateway-service:http" -j KUBE-SEP-6O5FHQRN5IVNPW4Q##########################-A KUBE-SEP-2NPKETIWKKVUXGCL -s 172.200.6.224/32 -m comment --comment "default/ccs-gateway-service:http" -j KUBE-MARK-MASQ-A KUBE-SEP-2NPKETIWKKVUXGCL -p tcp -m comment --comment "default/ccs-gateway-service:http" -m tcp -j DNAT --to-destination 172.200.6.224:80#########################-A KUBE-SEP-6O5FHQRN5IVNPW4Q -s 172.200.6.225/32 -m comment --comment "default/ccs-gateway-service:http" -j KUBE-MARK-MASQ-A KUBE-SEP-6O5FHQRN5IVNPW4Q -p tcp -m comment --comment "default/ccs-gateway-service:http" -m tcp -j DNAT --to-destination 172.200.6.225:80

同时,可以看到Service所申请的端口38081被Kube-proxy所代理和监听

# netstat -ntlp | grep 30081tcp 0 00.0.0.0:30081 0.0.0.0:* LISTEN 3665705/kube-proxy

-

LoadBalancer

不带有Endpoint的Service

kubectl create svc clusterip fake-endpoint --tcp=80-A KUBE-SERVICES -d 10.101.117.0/32 -p tcp -m comment --comment "default/fake-endpoint:80 has no endpoints" -m tcp --dport 80 -j REJECT --reject-with icmp-port-unreachable

带有外部endpoint的Service

直接通过iptable规则转发到对应的外部ep地址

apiVersion: v1kind: Servicemetadata:labels:app: externalname: externalnamespace: defaultspec:ports:name: httpprotocol: TCPport: 80sessionAffinity: Nonetype: ClusterIP---apiVersion: v1kind: Endpointsmetadata:labels:app: externalname: externalnamespace: defaultsubsets:addresses:ip: 10.124.142.43ports:name: httpport: 80protocol: TCP

-A KUBE-SERVICES ! -s 172.200.0.0/16 -d 10.111.246.87/32 -p tcp -m comment --comment "default/external:http cluster IP" -m tcp --dport 80 -j KUBE-MARK-MASQ-A KUBE-SERVICES -d 10.111.246.87/32 -p tcp -m comment --comment "default/external:http cluster IP" -m tcp --dport 80 -j KUBE-SVC-LI2K5327B6J24KJ3-A KUBE-SEP-QTGIPNOYXN2CZGD5 -s 10.124.142.43/32 -m comment --comment "default/external:http" -j KUBE-MARK-MASQ-A KUBE-SEP-QTGIPNOYXN2CZGD5 -p tcp -m comment --comment "default/external:http" -m tcp -j DNAT --to-destination 10.124.142.43:80

总结

-

ClusterIP类型,KubeProxy监听Service和Endpoint创建规则,采用DNAT将目标地址转换为Pod的ip和端口,当有多个ep时,按照策略进行转发,默认RR模式时,iptables采用:比如有4个实例,四条规则的概率分别为0.25, 0.33, 0.5和 1,按照顺序,一次匹配完成整个流量的分配。

-

NodePort类型,将会在上述ClusterIP模式之后,再加上Kube-Proxy的监听(为了确保其他服务不会占用该端口)和KUBE-NODEPORT的iptable规则

审核编辑:郭婷

-

什么是 K8S,如何使用 K8S2025-06-25 255

-

OpenStack与K8s结合的两种方案的详细介绍和比较2018-10-14 28200

-

Docker不香吗为什么还要用K8s2021-06-02 3941

-

简单说明k8s和Docker之间的关系2021-06-24 4000

-

K8S集群服务访问失败怎么办 K8S故障处理集锦2021-09-01 16948

-

K8S(kubernetes)学习指南2022-06-29 779

-

mysql部署在k8s上的实现方案2022-09-26 3101

-

k8s是什么意思?kubeadm部署k8s集群(k8s部署)|PetaExpres2023-07-19 1551

-

什么是K3s和K8s?K3s和K8s有什么区别?2023-08-03 9097

-

k8s生态链包含哪些技术2023-08-07 1931

-

基于DPU与SmartNIC的K8s Service解决方案2024-09-02 1972

-

k8s云原生开发要求2024-10-24 1046

-

混合云部署k8s集群方法有哪些?2024-11-07 758

-

k8s和docker区别对比,哪个更强?2024-12-11 1120

-

如何通过Docker和K8S集群实现高效调用GPU2025-03-18 922

全部0条评论

快来发表一下你的评论吧 !