Python-爬虫开发01

描述

爬虫基本概念

爬虫的定义

- 网络爬虫(被称为 网页蜘蛛,网络机器人 ),就是 模拟客户端发送网络请求 ,接收请求响应,一种按照一定的规则,自动地抓取互联网信息的程序

- 一般来说,只要浏览器上能做的事情,爬虫都可以做

**爬虫的分类

**

- 通用爬虫 :通常指搜索引擎的爬虫

- 聚集爬虫 :针对特定网站或某些特定网页的爬虫

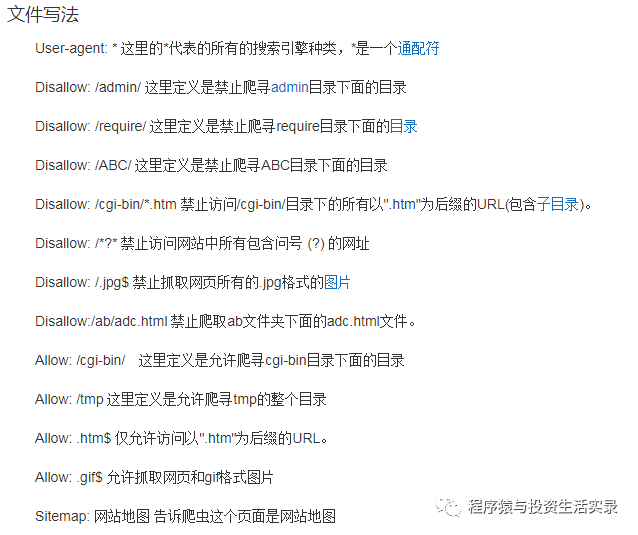

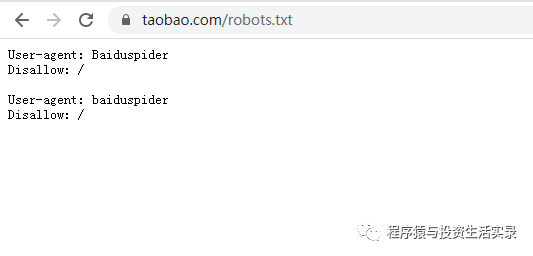

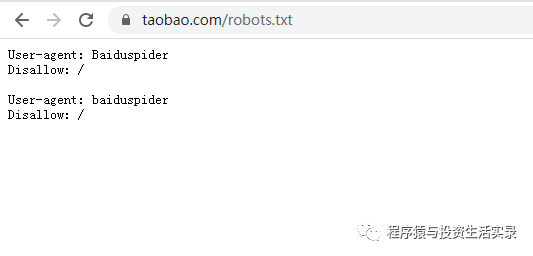

ROBOTS协议

- Robots协议: 也叫robots.txt(统一小写)是一种存放于网站根目录下的ASCII编码的文本文件,它通常告诉网络搜索引擎的漫游器(又称网络蜘蛛),此网站中的哪些内容是不应被搜索引擎的漫游器获取的,哪些是可以被漫游器获取的

- 如果将网站视为酒店里的一个房间,robots.txt就是主人在房间门口悬挂的“请勿打扰”或“欢迎打扫”的提示牌。这个文件告诉来访的搜索引擎哪些房间可以进入和参观,哪些房间因为存放贵重物品,或可能涉及住户及访客的隐私而不对搜索引擎开放。但robots.txt不是命令,也不是防火墙,如同守门人无法阻止窃贼等恶意闯入者

举例:

-

访问淘宝网的robots文件: https://www.taobao.com/robots.txt

* 很显然淘宝不允许百度的机器人访问其网站下其所有的目录

* 很显然淘宝不允许百度的机器人访问其网站下其所有的目录 -

如果允许所有的链接访问应该是:Allow: /

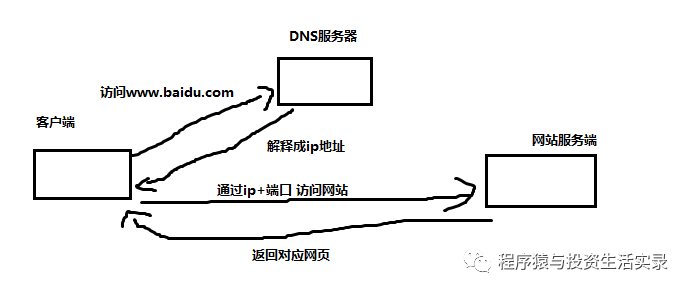

http和https的概念

-

http

- 超文本传输协议

- 默认端口号:80

-

https

- HTTP+SSL(安全套接字层)

- 默认端口号:443

-

**HTTPS比HTTP更安全,但是性能更低

**

URL的形式

- sheme://host[:port#]/path/.../[?query-string][#anchor]

- scheme:协议(例如:http、https、ftp)

- host:服务器ip地址/域名

- port:服务器端口号

- path:访问资源的路径

- query-string:请求的参数

- anchor:锚(跳转到网页的指定锚点位置)

- 例:http://item.jd.com/100008959687.html#product-detail

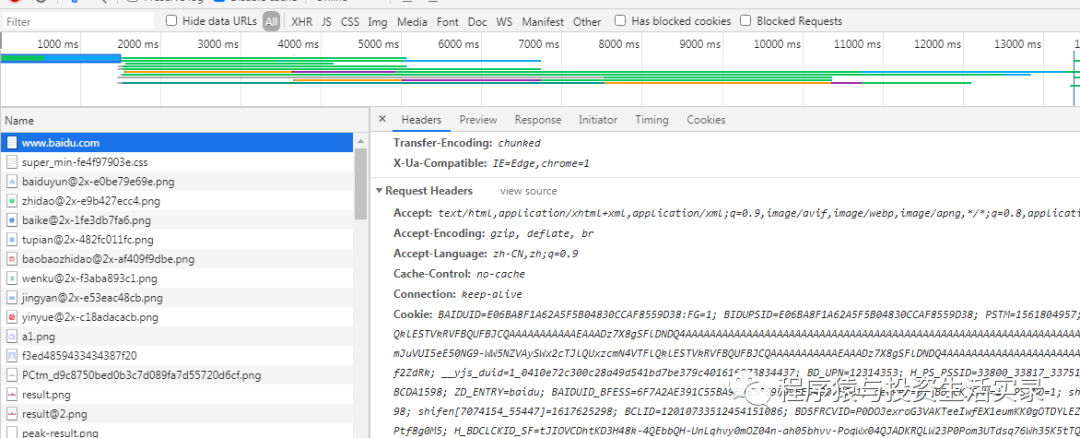

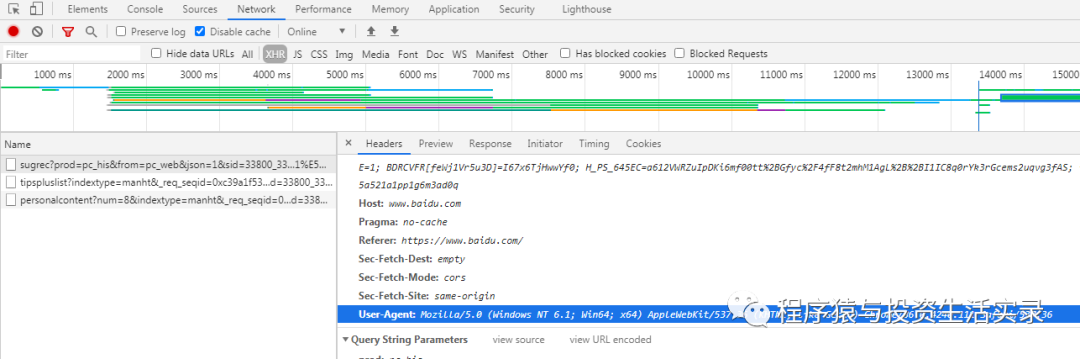

Http常见请求头

- Host (主机和端口号)

- Connection(链接类型)

- Upgrade-insecure-Requests(升级为https请求)

- User-Agent(浏览器名称)

- Accept(传输文件类型)

- Referer(页面跳转处)

- Accept-Encoding(文件编解码格式)

- Cookie

- x-requested-with:XMLHttpRequest(是Ajax异步请求)

Http常见响应码

- 200:成功

- 302:临时性重定向到新的url

- 404:请求的路径找不到

- 500:服务器内部错误

rquests模块

requests的官网

- https://docs.python-requests.org/zh_CN/latest/index.html

**示例:下载官网上的图片

**

import requests

url="https://docs.python-requests.org/zh_CN/latest/_static/requests-sidebar.png"

response=requests.get(url)

# 响应状态码为200,表示请求成功

if response.status_code==200:

# 生成图片

with open("aa.png","wb") as f:

f.write(response.content)

response.text 和 response.content 的区别

- response.text

- 类型:str

- 解码类型:根据http头部对响应的编码作出有根据的推测,推测的文本编码

- 修改编码的方法:response.encoding='gbk'

- response.content

- 类型:bytes

- 解码类型:没有指定

- 修改编码的方法:response.content.decode('utf-8')

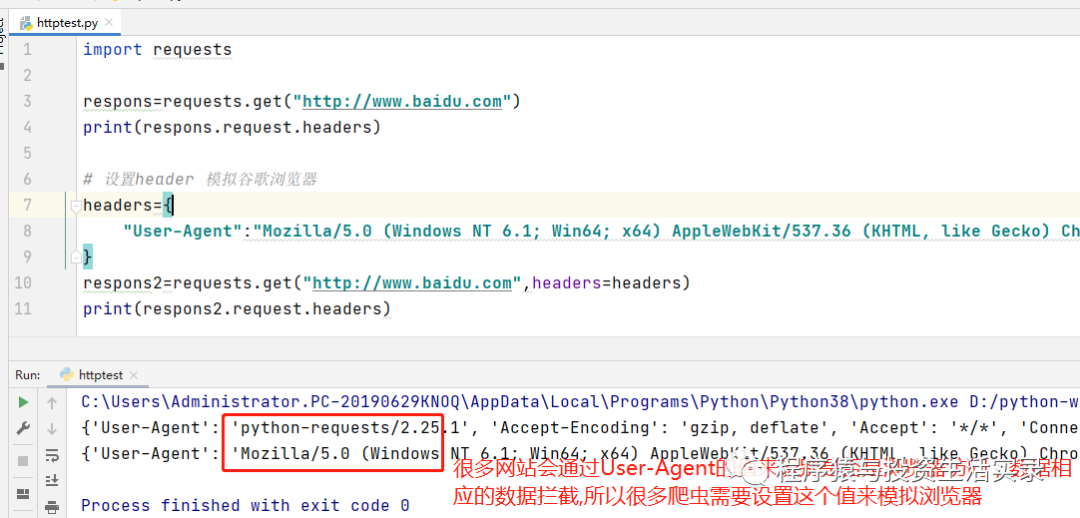

**requests请求带header

**

- 主要是模拟浏览器,欺骗服务器,获取和浏览器一致的内容

- header的数据结构是 字典

示例

import requests

respons=requests.get("http://www.baidu.com")

print(respons.request.headers)

# 设置header 模拟谷歌浏览器

headers={

"User-Agent":"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"

}

respons2=requests.get("http://www.baidu.com",headers=headers)

print(respons2.request.headers)

获取User-Agent的值

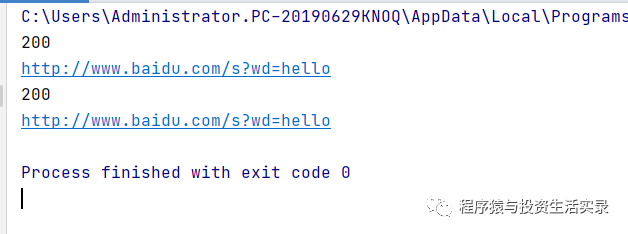

带参数的requests请求

import requests

url="http://www.baidu.com/s?"

# 添加参数

params={

"wd":"hello"

}

# 方式一

respons=requests.get(url,params=params)

# 方式二

respons2=requests.get("http://www.baidu.com/s?wd={}".format("hello"))

print(respons.status_code)

print(respons.request.url)

print(respons2.status_code)

print(respons2.request.url)

requests发送post请求

- 语法

- response=requests.post("http://www.baidu.com/",data=data,headers=headers)

- data的数据结构是 字典

- 示例

import requests

url="https://www.jisilu.cn/data/cbnew/cb_list/?___jsl=LST___t=1617719326771"

headers={

"User-Agent":"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"

}

params={

"curr_iss_amt": 20

}

respons=requests.post(url,data=params,headers=headers)

print(respons.content.decode())

requests使用代理方式

- 使用代理的原因

- 让服务器以为不是同一个客户端请求

- 防止我们的真实地址被泄露,防止被追究

- 语法

- requetst.get("http://www.baidu.com",proxies=proxies)

- proxies的数据结构 字典

- proxies={ "http":"http://12.33.34.54:8374","https":"https://12.33.34.54:8374" }

- 示例

import requests

url="http://www.baidu.com"

headers={

"User-Agent":"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"

}

proxies={

"http": "175.42.158.211:9999"

}

respons=requests.post(url,proxies=proxies)

print(respons.status_code)

**cookie和session的区别

**

- cookie数据存放在客户的浏览器上,session数据放在服务器上

- cookie不是很安全,别人可以分析存放在本地的cookie并进行cookie欺骗

- session会在一定时间内保存在服务器上。当访问增多会比较占用服务器性能

- 单个cookie保存的数据不能超过4k,很多浏览器都限制一个站点最多保存20个cookie

爬虫处理cookie和session

- 带上cookie、session的好处

- 能够请求到登录之后的页面

- 带上cookie、session的坏处

- 一套cookie和session往往和一个用户对应,请求太快,请求次数太多,容易被服务器识别为爬虫

- 如果不需要cookie的时候尽量不去使用cookie

**requests 处理cookies、session请求

**

- reqeusts 提供了一个session类,来实现客户端和服务端的会话保持

- 使用方法

- 实例化一个session对象

- 让session发送get或者post请求

- 语法

- session=requests.session()

- response=session.get(url,headers)

- 示例

import requests

headers={

"User-Agent":"Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"

}

data={

"email":"用户名",

"password":"密码"

}

session=requests.session()

# session发送post请求,cookie保存在其中

session.post("http://www.renren.com/PLogin.do",data=data,headers=headers)

# 使用session请求登录后才能访问的页面

r=session.get("http://www.renren.com/976564425",headers=headers)

print(r.content.decode())

声明:本文内容及配图由入驻作者撰写或者入驻合作网站授权转载。文章观点仅代表作者本人,不代表电子发烧友网立场。文章及其配图仅供工程师学习之用,如有内容侵权或者其他违规问题,请联系本站处理。

举报投诉

-

如何解决Python爬虫中文乱码问题?Python爬虫中文乱码的解决方法2024-01-12 3587

-

利用Python编写简单网络爬虫实例2023-02-24 729

-

python网络爬虫概述2022-03-21 3257

-

Python爬虫简介与软件配置2022-01-11 1440

-

0基础入门Python爬虫实战课2021-07-25 2375

-

用Python写网络爬虫2021-06-01 872

-

Python爬虫:使用哪种协议的代理IP最佳?2020-06-28 2345

-

python爬虫框架有哪些2019-03-22 7312

-

python为什么叫爬虫 python工资高还是java的高2019-02-19 821

-

python爬虫入门教程之python爬虫视频教程分布式爬虫打造搜索引擎2018-08-28 1906

-

Python爬虫与Web开发库盘点2018-05-10 2570

-

Python数据爬虫学习内容2018-05-09 2075

-

WebSpider——多个python爬虫项目下载2018-03-26 788

-

详细用Python写网络爬虫2017-09-07 894

全部0条评论

快来发表一下你的评论吧 !

* 很显然淘宝不允许百度的机器人访问其网站下其所有的目录

* 很显然淘宝不允许百度的机器人访问其网站下其所有的目录