HarmonyOS Development in Practice: Wearable Apps

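Background

I wrote this piece on HarmonyOS wearable app development simply to offer front-line developers a little help.

In some companies, business needs lead to a requirement that developers build specific features on Huawei watches, along with the assumption that the development cost is trivial.

The reasons development is assumed to be easy come down to a few points:

- Marketing: HarmonyOS already appears to be very mature

- UI in practice: developers can already build polished pages and features on phones

- Reporting upward: anyone who can write H5 front-end code can supposedly start building HarmonyOS apps right away

Key points for wearable development

- On developer.harmonyos.com, read only the 3.0 version of the documentation

- Both smart wearable and lite wearable apps are developed in JS

- Smart wearable products: HUAWEI WATCH 3

- Lite wearable products: HUAWEI WATCH GT 2 Pro, HUAWEI WATCH GT 3

- For any API your product features depend on, be sure to verify it on a real device

- Be sure to read the documentation on calling Java from JS, because the JS APIs available on wearable devices are relatively limited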

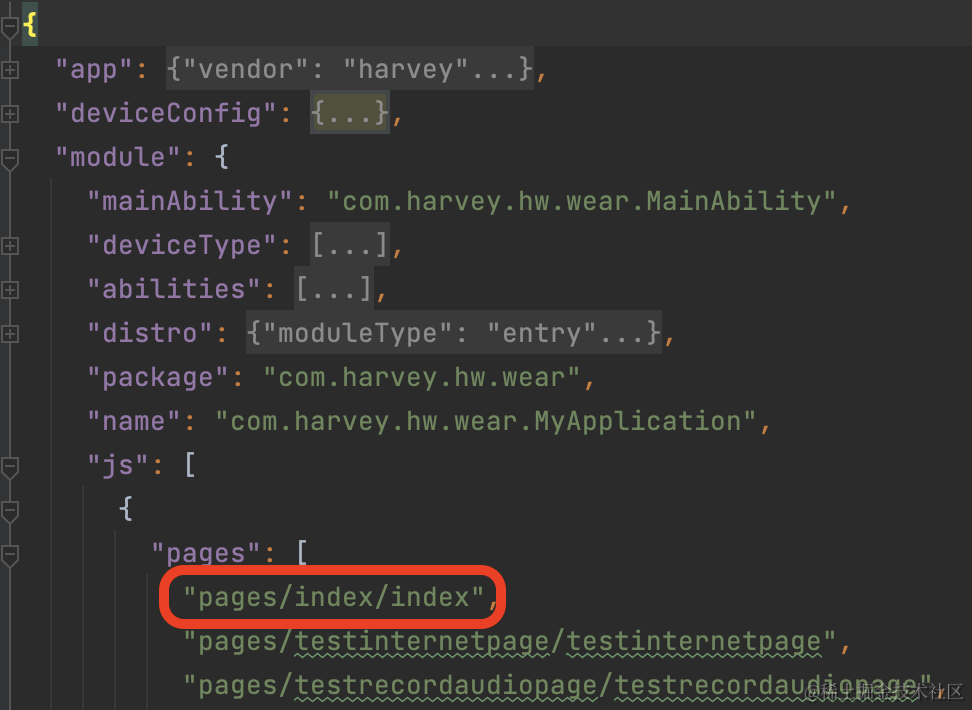

- The first page shown when a JS app launches is determined by the first entry under module -> js -> pages in config.json, for example the file in the red box in the screenshot
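As a rough illustration of the last point, the launch page can be read off the config by taking the first entry of `pages`. The sketch below uses a hypothetical, trimmed-down config object (the page names are made up, and a real config.json has many more fields). It assumes `module.js` is an array of entries with `name` and `pages`, and it only shows the lookup order, not how the framework actually loads pages.

```javascript
// Hypothetical, trimmed-down config.json content; page names are illustrative only.
const config = {
  module: {
    js: [
      {
        name: 'default',
        pages: [
          'pages/index/index', // first entry: the page shown at launch
          'pages/testrecordaudiopage/testrecordaudiopage'
        ]
      }
    ]
  }
};

// Returns the first page of the named JS module, or null if none is declared.
function launchPage(cfg, moduleName = 'default') {
  const jsModules = (cfg.module && cfg.module.js) || [];
  const target = jsModules.find(m => m.name === moduleName);
  return target && target.pages && target.pages.length > 0 ? target.pages[0] : null;
}

console.log(launchPage(config));
```

Reordering the `pages` array is therefore enough to change which page opens first.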

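Key topic: calling Java from JS

Scenario

Audio recording (see docs.qq.com/doc/DUmN4VVhBd3NxdExK).

Screenshots

To demonstrate the result quickly, the screenshots come from the IDE.

Code walkthrough

This assumes you have already read the 3.0 development documentation and know the directory structure and the development languages.

The JS code has three parts: 1. the hml page 2. the js logic 3. the css page styles

Layout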

testrecordaudiopage.js

import router from '@system.router';
import featureAbility from '@ohos.ability.featureAbility';
import serviceBridge from '../../generated/ServiceBridge.js';

// Page instance, kept so that promise callbacks can update the view model
var vm = null

export default {
    data: {
        title: "",
        button_record: "Record",
        show_button_record: true,
        button_play: "Play",
        show_button_play: true,
        record: null
    },
    onInit() {
        this.title = "JS Recording";
        vm = this
    },
    onHide() {
        // Stop playback and recording when the page is hidden
        if (this.record) {
            this.record.stopPlay()
            this.record.stopRecord()
        }
    },
    swipeEvent(e) {
        // Swipe right to go back
        if (e.direction == "right") {
            router.back()
        }
    },
    async audioRecorderDemo(type) {
        this.record = new serviceBridge()
        if (type === 'recordaudio') {
            if (this.button_record === 'Record') {
                this.record.startRecord().then(value => {
                    // 3: recording started on the Java side
                    if (value.abilityResult == 3) {
                        vm.button_record = 'Stop recording'
                        vm.show_button_play = false
                    }
                });
            } else {
                this.record.stopRecord().then(value => {
                    if (value.abilityResult == 1) {
                        vm.button_record = 'Record'
                        vm.show_button_play = true
                    }
                });
            }
        } else if (type === 'playaudio') {
            if (this.button_play === 'Play') {
                this.record.play().then(value => {
                    // 3: playback started on the Java side
                    if (value.abilityResult == 3) {
                        vm.button_play = 'Stop playing'
                        vm.show_button_record = false
                        // Poll once a second and restore the buttons when playback ends
                        var playTimeStatus = setInterval(() => {
                            this.record.isPlaying().then(value => {
                                if (!value.abilityResult) {
                                    vm.button_play = 'Play'
                                    vm.show_button_record = true
                                    clearInterval(playTimeStatus)
                                }
                            })
                        }, 1000)
                    }
                })
            } else {
                this.record.stopPlay().then(value => {
                    if (value.abilityResult == 1) {
                        vm.button_play = 'Play'
                        vm.show_button_record = true
                    }
                })
            }
        }
    }
}
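The playback branch above polls `isPlaying()` once a second and restores the buttons when playback ends. A minimal sketch of that polling pattern, pulled out of the page, might look like the following. `FakeBridge` and `pollUntilStopped` are hypothetical names; `FakeBridge` stands in for the generated ServiceBridge so the snippet runs without a device, and the resolved value is assumed to have the `{ abilityResult: ... }` shape used in this article.

```javascript
// Hypothetical stand-in for the generated ServiceBridge: reports "playing"
// for a fixed number of polls, then reports "stopped".
class FakeBridge {
  constructor(pollsWhilePlaying) {
    this.remaining = pollsWhilePlaying;
  }
  async isPlaying() {
    const playing = this.remaining > 0;
    this.remaining -= 1;
    return { code: 0, abilityResult: playing };
  }
}

// Polls bridge.isPlaying() every intervalMs and resolves with the number of
// polls made once abilityResult becomes false.
function pollUntilStopped(bridge, intervalMs) {
  return new Promise((resolve, reject) => {
    let polls = 0;
    const timer = setInterval(() => {
      bridge.isPlaying().then(value => {
        polls += 1;
        if (!value.abilityResult) {
          clearInterval(timer); // stop polling as soon as playback ends
          resolve(polls);
        }
      }).catch(err => {
        clearInterval(timer); // do not leave the timer running on failure
        reject(err);
      });
    }, intervalMs);
  });
}

pollUntilStopped(new FakeBridge(2), 10).then(polls => {
  console.log('playback finished after ' + polls + ' polls');
});
```

Clearing the timer on the error path as well keeps a failed bridge call from leaving an interval running after the page is gone.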

testrecordaudiopage.hml

<div class="container" onswipe="swipeEvent">
    <text class="title">
        {{ title }}
    </text>
    <div class="audiobutton">
        <button class="buttons_record" show="{{show_button_record}}" onclick="audioRecorderDemo('recordaudio')">{{button_record}}</button>
        <button class="buttons_play" show="{{show_button_play}}" onclick="audioRecorderDemo('playaudio')">{{button_play}}</button>
    </div>
</div>

testrecordaudiopage.css

.container {
    width: 100%;
    flex-direction: column;
    background-color: black;
}

.title {
    font-size: 25fp;
    text-align: center;
    width: 100%;
    margin: 20px;
}

.audiobutton {
    width: 100%;
    display: flex;
    flex-direction: column;
    align-items: center;
}

.buttons_record {
    width: 45%;
    height: 15%;
    font-size: 20fp;
    text-color: white;
    background-color: #1F71FF;
}

.buttons_play {
    width: 45%;
    height: 15%;
    font-size: 20fp;
    margin-top: 10vp;
    text-color: white;
    background-color: #1F71FF;
}

Features implemented with the Java API

js/generated/ServiceBridge.js. Note that this file is auto-generated.

For guidance on auto-generating the JS code, see: docs.qq.com/doc/DUmN4VVhBd3NxdExK

// This file is automatically generated. Do not modify it!

const ABILITY_TYPE_EXTERNAL = 0;

const ABILITY_TYPE_INTERNAL = 1;

const ACTION_SYNC = 0;

const ACTION_ASYNC = 1;

const BUNDLE_NAME = 'com.harvey.hw.wear';

const ABILITY_NAME = 'com.harvey.hw.wear.ServiceBridgeStub';

......

const OPCODE_startRecord = 11;

const OPCODE_stopRecord = 12;

const OPCODE_stopPlay = 13;

const OPCODE_isPlaying = 14;

const OPCODE_play = 15;

const sendRequest = async (opcode, data) => {

var action = {};

action.bundleName = BUNDLE_NAME;

action.abilityName = ABILITY_NAME;

action.messageCode = opcode;

action.data = data;

action.abilityType = ABILITY_TYPE_INTERNAL;

action.syncOption = ACTION_SYNC;

return FeatureAbility.callAbility(action);

}

class ServiceBridge {

......

async startRecord() {

if (arguments.length != 0) {

throw new Error("Method expected 0 arguments, got " + arguments.length);

}

let data = {};

const result = await sendRequest(OPCODE_startRecord, data);

return JSON.parse(result);

}

async stopRecord() {

if (arguments.length != 0) {

throw new Error("Method expected 0 arguments, got " + arguments.length);

}

let data = {};

const result = await sendRequest(OPCODE_stopRecord, data);

return JSON.parse(result);

}

async stopPlay() {

if (arguments.length != 0) {

throw new Error("Method expected 0 arguments, got " + arguments.length);

}

let data = {};

const result = await sendRequest(OPCODE_stopPlay, data);

return JSON.parse(result);

}

async isPlaying() {

if (arguments.length != 0) {

throw new Error("Method expected 0 arguments, got " + arguments.length);

}

let data = {};

const result = await sendRequest(OPCODE_isPlaying, data);

return JSON.parse(result);

}

async play() {

if (arguments.length != 0) {

throw new Error("Method expected 0 arguments, got " + arguments.length);

}

let data = {};

const result = await sendRequest(OPCODE_play, data);

return JSON.parse(result);

}

}

export default ServiceBridge;
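To make the request flow of the generated file easier to follow, here is a small sketch of what a single call does: build the action object, hand it to `callAbility`, and parse the JSON string that comes back. On a device the `FeatureAbility` object is provided by the framework; the `fakeFeatureAbility` below is a hypothetical stub so the snippet can run anywhere, and the reply it returns merely mimics the `{code, abilityResult}` shape used in this article. `buildAction` is likewise a made-up helper name.

```javascript
const BUNDLE_NAME = 'com.harvey.hw.wear';
const ABILITY_NAME = 'com.harvey.hw.wear.ServiceBridgeStub';
const ABILITY_TYPE_INTERNAL = 1;
const ACTION_SYNC = 0;
const OPCODE_startRecord = 11;

// Hypothetical stub for the framework-provided FeatureAbility object.
const fakeFeatureAbility = {
  lastAction: null,
  async callAbility(action) {
    this.lastAction = action;
    // Pretend the Java side started recording (abilityResult 3).
    return JSON.stringify({ code: 0, abilityResult: 3 });
  }
};

// Builds the same action object that sendRequest assembles field by field.
function buildAction(opcode, data) {
  return {
    bundleName: BUNDLE_NAME,
    abilityName: ABILITY_NAME,
    messageCode: opcode,
    data: data,
    abilityType: ABILITY_TYPE_INTERNAL,
    syncOption: ACTION_SYNC
  };
}

// Sends the startRecord opcode and parses the JSON string reply.
async function startRecord(featureAbility) {
  const reply = await featureAbility.callAbility(buildAction(OPCODE_startRecord, {}));
  return JSON.parse(reply);
}

startRecord(fakeFeatureAbility).then(result => {
  console.log(result.abilityResult === 3 ? 'recording started' : 'not started');
});
```

Passing the ability object in as a parameter is only a convenience for running the sketch off-device; the generated file calls the global directly.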

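Since this file is auto-generated, let's move on to the project configuration.

First, create a file named ServiceBridge.java under the package (this article uses com.harvey.hw.wear as the example) in <project root>/entry/src/main/java.

Second, add the annotations that configure initialization and declare the ServiceBridge.js file. The registration path is MainAbility because the initialization code lives in MainAbility.java.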

package com.harvey.hw.wear;

import com.harvey.hw.wear.bluetooth.BLEMain;

import ohos.annotation.f2pautogen.ContextInject;

import ohos.annotation.f2pautogen.InternalAbility;

import ohos.app.AbilityContext;

import ohos.bundle.IBundleManager;

import ohos.dcall.DistributedCallManager;

import ohos.hiviewdfx.HiLog;

import ohos.hiviewdfx.HiLogLabel;

import ohos.media.audio.*;

import java.io.*;

import java.util.Arrays;

@InternalAbility(registerTo = "com.harvey.hw.wear.MainAbility")

public class ServiceBridge {

private static final HiLogLabel LABEL_LOG = new HiLogLabel(3, 0xD001100, "ServiceBridge");

@ContextInject

AbilityContext abilityContext;

......

// Example: audio recording

/**

* Recording: start

* @return

*/

public int startRecord() {

if(isRecording){

return 1;

}

if(abilityContext.verifySelfPermission("ohos.permission.MICROPHONE") == IBundleManager.PERMISSION_DENIED){

requestPermissions();

return 2;

}

HiLog.error(LABEL_LOG, "RecordServiceAbility::onStart");

init();

runRecord();

return 3;

}

/**

* Recording: stop

* @return

*/

public int stopRecord() {

if (isRecording && audioCapturer.stop()) {

audioCapturer.release();

}

isRecording = false;

return 1;

}

private AudioRenderer renderer;

private static boolean isPlaying = false;

/**

* Playback: stop

* @return

*/

public int stopPlay() {

if(isPlaying && renderer.stop()){

renderer.release();

}

isPlaying = false;

return 1;

}

/**

* Returns the audio playback state

* @return

*/

public boolean isPlaying(){

return isPlaying;

}

/**

* Playback: start

* @return

*/

public int play() {

if(isPlaying){

return 1;

}

isPlaying = true;

String Path = "/data/data/"+abilityContext.getBundleName()+"/files/record.pcm";

File pcmFilePath = new File(Path);

if(!pcmFilePath.isFile() || !pcmFilePath.exists()){

isPlaying = false;

return 2;

}

new Thread(new Runnable() {

@Override

public void run() {

AudioStreamInfo audioStreamInfo = new AudioStreamInfo.Builder().sampleRate(SAMPLE_RATE)

.encodingFormat(ENCODING_FORMAT)

.channelMask(CHANNEL_OUT_MASK)

.streamUsage(AudioStreamInfo.StreamUsage.STREAM_USAGE_MEDIA)

.build();

AudioRendererInfo audioRendererInfo = new AudioRendererInfo.Builder().audioStreamInfo(audioStreamInfo)

.audioStreamOutputFlag(AudioRendererInfo.AudioStreamOutputFlag.AUDIO_STREAM_OUTPUT_FLAG_DIRECT_PCM)

.sessionID(AudioRendererInfo.SESSION_ID_UNSPECIFIED)

.bufferSizeInBytes(BUFFER_SIZE)

.isOffload(false)

.build();

renderer = new AudioRenderer(audioRendererInfo, AudioRenderer.PlayMode.MODE_STREAM);

AudioInterrupt audioInterrupt = new AudioInterrupt();

AudioManager audioManager = new AudioManager();

audioInterrupt.setStreamInfo(audioStreamInfo);

audioInterrupt.setInterruptListener(new AudioInterrupt.InterruptListener() {

@Override

public void onInterrupt(int type, int hint) {

if (type == AudioInterrupt.INTERRUPT_TYPE_BEGIN

&& hint == AudioInterrupt.INTERRUPT_HINT_PAUSE) {

renderer.pause();

} else if (type == AudioInterrupt.INTERRUPT_TYPE_BEGIN

&& hint == AudioInterrupt.INTERRUPT_HINT_NONE) {

} else if (type == AudioInterrupt.INTERRUPT_TYPE_END && (

hint == AudioInterrupt.INTERRUPT_HINT_NONE

|| hint == AudioInterrupt.INTERRUPT_HINT_RESUME)) {

renderer.start();

} else {

HiLog.error(LABEL_LOG, "unexpected type or hint");

}

}

});

audioManager.activateAudioInterrupt(audioInterrupt);

AudioDeviceDescriptor[] devices = AudioManager.getDevices(AudioDeviceDescriptor.DeviceFlag.OUTPUT_DEVICES_FLAG);

for(AudioDeviceDescriptor des:devices){

if(des.getType() == AudioDeviceDescriptor.DeviceType.SPEAKER){

renderer.setOutputDevice(des);

break;

}

}

renderer.setVolume(1.0f);

renderer.start();

BufferedInputStream bis1 = null;

try {

bis1 = new BufferedInputStream(new FileInputStream(pcmFilePath));

int minBufferSize = renderer.getMinBufferSize(SAMPLE_RATE, ENCODING_FORMAT,

CHANNEL_OUT_MASK);

byte[] buffers = new byte[minBufferSize];

int length;

// Write only the bytes actually read, and stop reading once playback is stopped

while (isPlaying && (length = bis1.read(buffers)) != -1) {

renderer.write(buffers, 0, length);

renderer.flush();

}

} catch (Exception e) {

e.printStackTrace();

} finally {

if (bis1 != null) {

try {

bis1.close();

} catch (IOException e) {

e.printStackTrace();

}

}

}

stopPlay();

}

}).start();

return 3;

}

private AudioCapturer audioCapturer;

private static final AudioStreamInfo.EncodingFormat ENCODING_FORMAT = AudioStreamInfo.EncodingFormat.ENCODING_PCM_16BIT;

private static final AudioStreamInfo.ChannelMask CHANNEL_IN_MASK = AudioStreamInfo.ChannelMask.CHANNEL_IN_STEREO;

private static final AudioStreamInfo.ChannelMask CHANNEL_OUT_MASK = AudioStreamInfo.ChannelMask.CHANNEL_OUT_STEREO;

private static final int SAMPLE_RATE = 16000;

private static final int BUFFER_SIZE = 1024;

private static boolean isRecording = false;

private void init() {

AudioDeviceDescriptor[] devices = AudioManager.getDevices(AudioDeviceDescriptor.DeviceFlag.INPUT_DEVICES_FLAG);

AudioDeviceDescriptor currentAudioType = null;

for(AudioDeviceDescriptor des:devices){

if(des.getType() == AudioDeviceDescriptor.DeviceType.MIC){

currentAudioType = des;

break;

}

}

AudioCapturerInfo.AudioInputSource source = AudioCapturerInfo.AudioInputSource.AUDIO_INPUT_SOURCE_MIC;

AudioStreamInfo audioStreamInfo = new AudioStreamInfo.Builder().audioStreamFlag(

AudioStreamInfo.AudioStreamFlag.AUDIO_STREAM_FLAG_AUDIBILITY_ENFORCED)

.encodingFormat(ENCODING_FORMAT)

.channelMask(CHANNEL_IN_MASK)

.streamUsage(AudioStreamInfo.StreamUsage.STREAM_USAGE_MEDIA)

.sampleRate(SAMPLE_RATE)

.build();

AudioCapturerInfo audioCapturerInfo = new AudioCapturerInfo.Builder().audioStreamInfo(audioStreamInfo)

.audioInputSource(source)

.build();

audioCapturer = new AudioCapturer(audioCapturerInfo, currentAudioType);

}

private void runRecord() {

isRecording = true;

new Thread(new Runnable() {

@Override

public void run() {

// Start recording

audioCapturer.start();

File file = new File("/data/data/"+abilityContext.getBundleName()+"/files/record.pcm");

if (file.isFile()) {

file.delete();

}

try (FileOutputStream outputStream = new FileOutputStream(file)) {

byte[] bytes = new byte[BUFFER_SIZE];

int length;

// Write only the bytes actually captured; try-with-resources closes the stream

while (isRecording && (length = audioCapturer.read(bytes, 0, bytes.length)) != -1) {

if (length > 0) {

outputStream.write(bytes, 0, length);

}

}

outputStream.flush();

} catch (IOException exception) {

HiLog.error(LABEL_LOG, "record exception," + exception.getMessage());

}

}

}).start();

}

private void requestPermissions() {

String[] permissions = {

"ohos.permission.MICROPHONE"

};

abilityContext.requestPermissionsFromUser(Arrays.stream(permissions)

.filter(permission -> abilityContext.verifySelfPermission(permission) != IBundleManager.PERMISSION_GRANTED).toArray(String[]::new), 0);

}

}
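The integer return values of the Java methods above are what the page receives as `abilityResult`. A small lookup table on the JS side can make those numbers easier to read. The meanings below are read off the Java code in this article; the table and function names are hypothetical, and this is only one possible way to organize them.

```javascript
// Meanings taken from startRecord() in ServiceBridge.java.
const START_RECORD_RESULT = {
  1: 'already recording',
  2: 'microphone permission missing; a permission request was issued',
  3: 'recording started'
};

// Meanings taken from play() in ServiceBridge.java.
const PLAY_RESULT = {
  1: 'already playing',
  2: 'no recording file found',
  3: 'playback started'
};

// Turns a parsed reply such as { code: 0, abilityResult: 2 } into readable text.
function describeResult(table, reply) {
  const text = table[reply.abilityResult];
  return text !== undefined ? text : 'unknown result ' + reply.abilityResult;
}

console.log(describeResult(START_RECORD_RESULT, { abilityResult: 2 }));
console.log(describeResult(PLAY_RESULT, { abilityResult: 3 }));
```

Note that `stopRecord()` and `stopPlay()` always return 1, so they need no table.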

Third, how is ServiceBridge.java initialized? Create an intermediate file named ServiceBridgeStub.java and register it from MainAbility.

public class MainAbility extends AceAbility {

@Override

public void onStart(Intent intent) {

ServiceBridgeStub.register(this);

......

}

......

}

ServiceBridgeStub.java

package com.harvey.hw.wear;

import java.lang.Object;

import java.lang.String;

import java.lang.reflect.Field;

import java.util.HashMap;

import java.util.Map;

import ohos.ace.ability.AceInternalAbility;

import ohos.app.AbilityContext;

import ohos.rpc.IRemoteObject;

import ohos.rpc.MessageOption;

import ohos.rpc.MessageParcel;

import ohos.rpc.RemoteException;

import ohos.utils.zson.ZSONObject;

public class ServiceBridgeStub extends AceInternalAbility {

public static final String BUNDLE_NAME = "com.harvey.hw.wear";

public static final String ABILITY_NAME = "com.harvey.hw.wear.ServiceBridgeStub";

public static final int ERROR = -1;

public static final int SUCCESS = 0;

......

public static final int OPCODE_startRecord = 11;

public static final int OPCODE_stopRecord = 12;

public static final int OPCODE_stopPlay = 13;

public static final int OPCODE_isPlaying = 14;

public static final int OPCODE_play = 15;

private static ServiceBridgeStub instance;

private ServiceBridge service;

private AbilityContext abilityContext;

public ServiceBridgeStub() {

super(BUNDLE_NAME, ABILITY_NAME);

}

public boolean onRemoteRequest(int code, MessageParcel data, MessageParcel reply,

MessageOption option) {

Map<String, Object> result = new HashMap<String, Object>();

switch(code) {

......

case OPCODE_startRecord: {

java.lang.String zsonStr = data.readString();

ZSONObject zsonObject = ZSONObject.stringToZSON(zsonStr);

result.put("code", SUCCESS);

result.put("abilityResult", service.startRecord());

break;}

case OPCODE_stopRecord: {

java.lang.String zsonStr = data.readString();

ZSONObject zsonObject = ZSONObject.stringToZSON(zsonStr);

result.put("code", SUCCESS);

result.put("abilityResult", service.stopRecord());

break;}

case OPCODE_stopPlay: {

java.lang.String zsonStr = data.readString();

ZSONObject zsonObject = ZSONObject.stringToZSON(zsonStr);

result.put("code", SUCCESS);

result.put("abilityResult", service.stopPlay());

break;}

case OPCODE_isPlaying: {

java.lang.String zsonStr = data.readString();

ZSONObject zsonObject = ZSONObject.stringToZSON(zsonStr);

result.put("code", SUCCESS);

result.put("abilityResult", service.isPlaying());

break;}

case OPCODE_play: {

java.lang.String zsonStr = data.readString();

ZSONObject zsonObject = ZSONObject.stringToZSON(zsonStr);

result.put("code", SUCCESS);

result.put("abilityResult", service.play());

break;}

default: reply.writeString("Opcode is not defined!");

return false;

}

return sendResult(reply, result, option.getFlags() == MessageOption.TF_SYNC);

}

private boolean sendResult(MessageParcel reply, Map<String, Object> result, boolean isSync) {

if (isSync) {

reply.writeString(ZSONObject.toZSONString(result));

} else {

MessageParcel response = MessageParcel.obtain();

response.writeString(ZSONObject.toZSONString(result));

IRemoteObject remoteReply = reply.readRemoteObject();

try {

remoteReply.sendRequest(0, response, MessageParcel.obtain(), new MessageOption());

response.reclaim();

} catch (RemoteException exception) {

return false;

}

}

return true;

}

public static void register(AbilityContext abilityContext) {

instance = new ServiceBridgeStub();

instance.onRegister(abilityContext);

}

private void onRegister(AbilityContext abilityContext) {

this.abilityContext = abilityContext;

this.service = new ServiceBridge();

this.setInternalAbilityHandler(this::onRemoteRequest);

try {

Field field = ServiceBridge.class.getDeclaredField("abilityContext");

field.setAccessible(true);

field.set(this.service, abilityContext);

field.setAccessible(false);

} catch (NoSuchFieldException | IllegalAccessException e) {

ohos.hiviewdfx.HiLog.error(new ohos.hiviewdfx.HiLogLabel(0, 0, null), "context injection fail.");

}

}

public static void deregister() {

instance.onDeregister();

}

private void onDeregister() {

abilityContext = null;

this.setInternalAbilityHandler(null);

}

}
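The stub's `onRemoteRequest` is essentially a dispatch table from opcode to service method: it wraps each return value as `{code, abilityResult}` and serializes the result to a string. The same idea can be sketched in JS. The `createService` factory and the `handlers` table below are hypothetical and use a simple in-memory object rather than the real Java classes; only the opcode numbers and the reply shape come from the code above.

```javascript
const SUCCESS = 0;
const OPCODES = { startRecord: 11, stopRecord: 12, stopPlay: 13, isPlaying: 14, play: 15 };

// Hypothetical in-memory service that mimics the playback return codes.
function createService() {
  return {
    playing: false,
    play() {
      if (this.playing) return 1; // already playing
      this.playing = true;
      return 3;                   // playback started
    },
    stopPlay() {
      this.playing = false;
      return 1;
    },
    isPlaying() {
      return this.playing;
    }
  };
}

// Dispatch table: opcode -> call on the service (playback opcodes only).
const handlers = {
  [OPCODES.play]: svc => svc.play(),
  [OPCODES.stopPlay]: svc => svc.stopPlay(),
  [OPCODES.isPlaying]: svc => svc.isPlaying()
};

// Returns the serialized reply, or null for an opcode with no handler.
function onRemoteRequest(code, svc) {
  const handler = handlers[code];
  if (!handler) {
    return null;
  }
  return JSON.stringify({ code: SUCCESS, abilityResult: handler(svc) });
}

const service = createService();
console.log(onRemoteRequest(OPCODES.play, service));
console.log(onRemoteRequest(OPCODES.isPlaying, service));
```

This is why the JS page can treat every reply uniformly: whatever the Java method returns, it arrives under the `abilityResult` key.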

Finally, configure the build so that the JS code is generated automatically.

Add the following to the build.gradle file of the entry main module:

apply plugin: 'com.huawei.ohos.hap'
apply plugin: 'com.huawei.ohos.decctest'

ohos {
    compileSdkVersion 6
    defaultConfig {
        compatibleSdkVersion 6
        // Output path for the generated JS template code
        def jsOutputDir = project.file("src/main/js/default/generated").toString()
        // Set the JS template output path in ohos -> defaultConfig
        javaCompileOptions {
            annotationProcessorOptions {
                arguments = ["jsOutputDir": jsOutputDir] // where the JS template code is generated
            }
        }
    }
    ......
    compileOptions {
        f2pautogenEnabled true // switch that enables the js2java-codegen tool
    }
}
......

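That completes the key points of wearable app development.

If you are still interested, try transferring data over BLE.

Closing

This is the project structure of a demo app from last year's wearable development work.

Notes

After reading a lot of HarmonyOS documentation, it is easy to mix up the development languages and the descriptions in the docs, so here is a brief overview of HarmonyOS versions 3.0 to 3.1.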
