资料下载

警报和强迫自己起床的开源设备

描述

理念的起源

在过去的几年里,我很难在早上醒来。我尝试了许多解决方案,例如警报和强迫自己起床并开始行动,但都没有奏效。我决定想出一个解决方案来解决所有问题,如果我在闹钟响起 5 分钟后没有起床,这个设备会在我头上放一个枕头。

电子产品

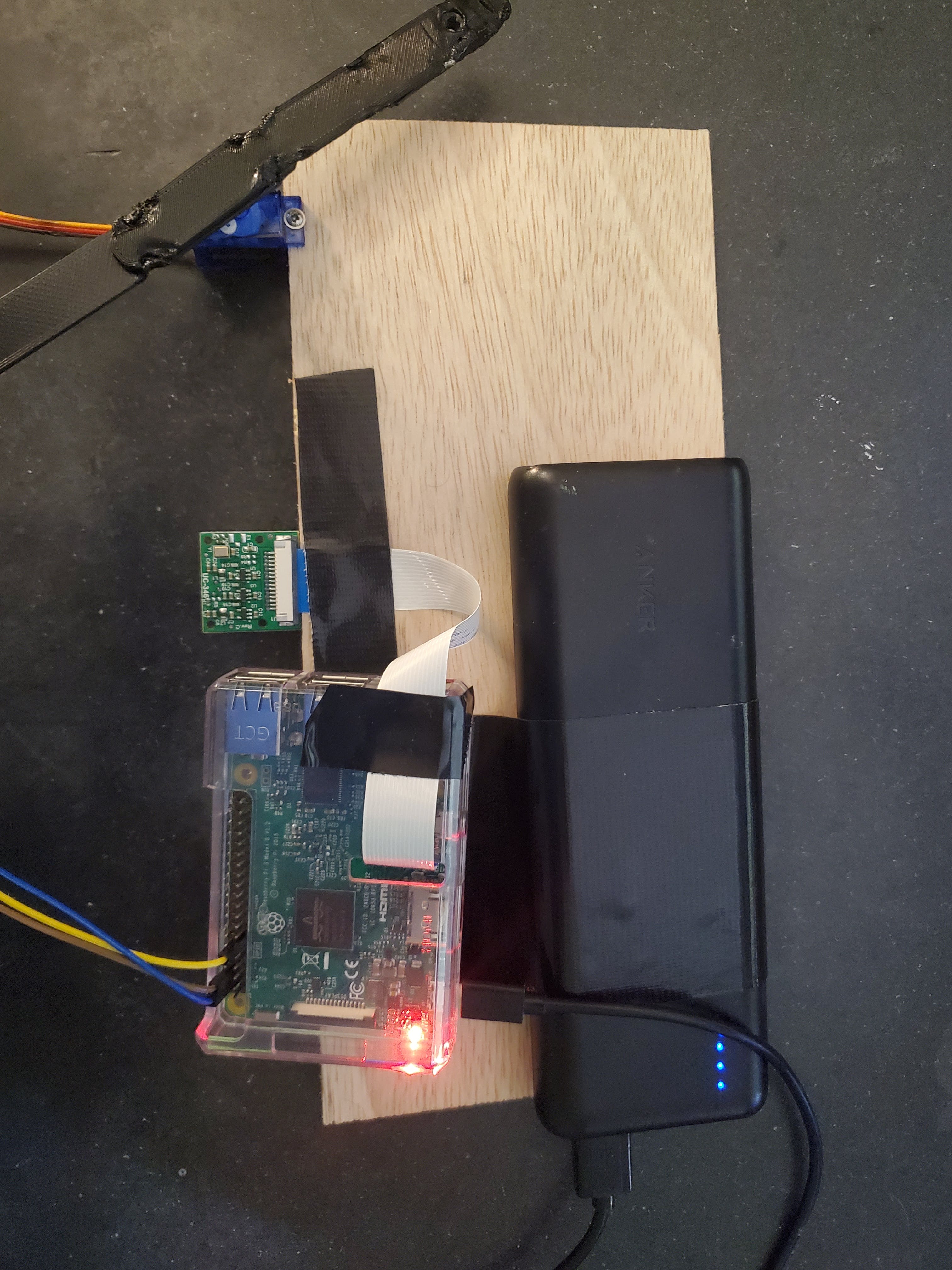

我使用的最终电子设备非常简单。它由一个 Raspberry Pi 3 B、一个 Raspberry Pi 相机、3 条公对母跳线、一个微型伺服器和一个电池组组成。

我将伺服引脚连接到树莓派上的引脚 4、6 和 11。我还将相机插入 Raspberry Pi 上的带状电缆的小插槽。

代码

在详细介绍我的项目的代码和面部识别部分之前,我想在 Youtube 上向cytrontech 大喊大叫,因为它发布了这个视频,展示了如何使用 Opencv 进行基本的面部识别。

在我开始使用我的 Raspberry Pi 之前,我确保安装了最新版本的 Raspberry Pi OS 的新映像。然后我开始下载opencv以便开始处理图像。一旦我确认我已经下载了 opencv 并且完全是最新的,我就开始浏览 cytrontech 视频。

代码部分由四个文件组成,其中两个与原始视频中的相同。

import cv2

name = 'Suad' #replace with your name

cam = cv2.VideoCapture(0)

cv2.namedWindow("press space to take a photo", cv2.WINDOW_NORMAL)

cv2.resizeWindow("press space to take a photo", 500, 300)

img_counter = 0

while True:

ret, frame = cam.read()

if not ret:

print("failed to grab frame")

break

cv2.imshow("press space to take a photo", frame)

k = cv2.waitKey(1)

if k%256 == 27:

# ESC pressed

print("Escape hit, closing…")

break

elif k%256 == 32:

# SPACE pressed

img_name = "dataset/"+ name +"/image_{}.jpg".format(img_counter)

cv2.imwrite(img_name, frame)

print("{} written!".format(img_name))

img_counter += 1

cam.release()

cv2.destroyAllWindows()

这是第一个名为 face_shot.py 的文件。它用于拍摄您的脸部照片并收集数据以训练模型。

#! /usr/bin/python

# import the necessary packages

from imutils import paths

import face_recognition

#import argparse

import pickle

import cv2

import os

# our images are located in the dataset folder

print("[INFO] start processing faces…")

imagePaths = list(paths.list_images("dataset"))

# initialize the list of known encodings and known names

knownEncodings = []

knownNames = []

# loop over the image paths

for (i, imagePath) in enumerate(imagePaths):

# extract the person name from the image path

print("[INFO] processing image {}/{}".format(i + 1,

len(imagePaths)))

name = imagePath.split(os.path.sep)[–2]

# load the input image and convert it from RGB (OpenCV ordering)

# to dlib ordering (RGB)

image = cv2.imread(imagePath)

rgb = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)

# detect the (x, y)-coordinates of the bounding boxes

# corresponding to each face in the input image

boxes = face_recognition.face_locations(rgb,

model="hog")

# compute the facial embedding for the face

encodings = face_recognition.face_encodings(rgb, boxes)

# loop over the encodings

for encoding in encodings:

# add each encoding + name to our set of known names and

# encodings

knownEncodings.append(encoding)

knownNames.append(name)

# dump the facial encodings + names to disk

print("[INFO] serializing encodings…")

data = {"encodings": knownEncodings, "names": knownNames}

f = open("encodings.pickle", "wb")

f.write(pickle.dumps(data))

f.close()

这是名为 train_model.py 的第二个文件。它用于根据您使用 face_shot.py 拍摄的图像来训练模型。

#! /usr/bin/python

# import the necessary packages

from datetime import datetime

import servo_move

from imutils.video import VideoStream

from imutils.video import FPS

import face_recognition

import imutils

import pickle

import time

import cv2

now = datetime.now()

da_time = datetime(2021, 4, 7, 12, 35, 00)

x = 0

#Initialize 'currentname' to trigger only when a new person is identified.

currentname = "unknown"

#Determine faces from encodings.pickle file model created from train_model.py

encodingsP = "encodings.pickle"

#use this xml file

#https://github.com/opencv/opencv/blob/master/data/haarcascades/haarcascade_frontalface_default.xml

cascade = "haarcascade_frontalface_default.xml"

# load the known faces and embeddings along with OpenCV's Haar

# cascade for face detection

print("[INFO] loading encodings + face detector…")

data = pickle.loads(open(encodingsP, "rb").read())

detector = cv2.CascadeClassifier(cascade)

# initialize the video stream and allow the camera sensor to warm up

print("[INFO] starting video stream…")

vs = VideoStream(src=0).start()

#vs = VideoStream(usePiCamera=True).start()

time.sleep(2.0)

# start the FPS counter

fps = FPS().start()

# loop over frames from the video file stream

while True:

# grab the frame from the threaded video stream and resize it

# to 500px (to speedup processing)

frame = vs.read()

frame = imutils.resize(frame, width=500)

# convert the input frame from (1) BGR to grayscale (for face

# detection) and (2) from BGR to RGB (for face recognition)

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

# detect faces in the grayscale frame

rects = detector.detectMultiScale(gray, scaleFactor=1.1,

minNeighbors=5, minSize=(30, 30),

flags=cv2.CASCADE_SCALE_IMAGE)

# OpenCV returns bounding box coordinates in (x, y, w, h) order

# but we need them in (top, right, bottom, left) order, so we

# need to do a bit of reordering

boxes = [(y, x + w, y + h, x) for (x, y, w, h) in rects]

# compute the facial embeddings for each face bounding box

encodings = face_recognition.face_encodings(rgb, boxes)

names = []

# loop over the facial embeddings

for encoding in encodings:

# attempt to match each face in the input image to our known

# encodings

matches = face_recognition.compare_faces(data["encodings"],

encoding)

name = "Unknown" #if face is not recognized, then print Unknown

# check to see if we have found a match

if True in matches:

# find the indexes of all matched faces then initialize a

# dictionary to count the total number of times each face

# was matched

matchedIdxs = [i for (i, b) in enumerate(matches) if b]

counts = {}

# loop over the matched indexes and maintain a count for

# each recognized face face

for i in matchedIdxs:

name = data["names"][i]

counts[name] = counts.get(name, 0) + 1

# determine the recognized face with the largest number

# of votes (note: in the event of an unlikely tie Python

# will select first entry in the dictionary)

name = max(counts, key=counts.get)

#If someone in your dataset is identified, print their name on the screen

if currentname != name:

currentname = name

print(currentname)

# update the list of names

names.append(name)

# loop over the recognized faces

for ((top, right, bottom, left), name) in zip(boxes, names):

# draw the predicted face name on the image – color is in BGR

cv2.rectangle(frame, (left, top), (right, bottom),

(0, 255, 0), 2)

y = top - 15 if top - 15 > 15 else top + 15

cv2.putText(frame, name, (left, y), cv2.FONT_HERSHEY_SIMPLEX,

.8, (255, 0, 0), 2)

# display the image to our screen

cv2.imshow("Facial Recognition is Running", frame)

key = cv2.waitKey(1) & 0xFF

# quit when 'q' key is pressed

if key == ord("q"):

break

# update the FPS counter

fps.update()

current_time = datetime.now()

if (currentname == "will") and (current_time.time() > da_time.time()) and (x == 0):

exec(open("servo_move.py").read())

x = 1

# stop the timer and display FPS information

fps.stop()

print("[INFO] elasped time: {:.2f}".format(fps.elapsed()))

print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# do a bit of cleanup

cv2.destroyAllWindows()

vs.stop()

这是视频中名为 face_rec.py 的第三个也是最后一个文件。它是您想要实际启动面部识别软件时运行的文件。我只添加了几行代码,它们是:

from datetime import datetime

import servo_move

now = datetime.now()

da_time = datetime(2021, 4, 7, 12, 35, 00)

x = 0

current_time = datetime.now()

if (currentname == "will") and (current_time.time() > da_time.time()) and (x = = 0):

exec(open("servo_move.py").read())

x = 1

这些代码行检查当前时间是否为上午 7:35,即闹钟响后 5 分钟。如果是并且我的脸在那里,那么它会执行一个名为servo_move.py 的文件。

import RPi.GPIO as GPIO

import time

GPIO.setmode(GPIO.BOARD)

GPIO.setup(11,GPIO.OUT)

servo1 = GPIO.PWM(11,50)

servo1.start(0)

servo1.ChangeDutyCycle(12)

time.sleep(2)

servo1.ChangeDutyCycle(2)

time.sleep(0.5)

servo1.ChangeDutyCycle(0)

servo1.stop()

GPIO.cleanup()

这是servo_move.py。它使伺服器移动 180 度然后向后移动。

制造

我必须制作的第一件作品是在我的电子部分展示的“电子板”。它只是一块木头,一切都依赖于它。

这是一个非常简单的设计,只是一些木头与我 3D 打印的一些铰链相连。我想说铰链不是我自己设计的,它们是guppyk在 thingiverse 上制作的。我使用的铰链和它们的许多变体可以在这里下载。

我会做什么不同

这个项目最终确实按预期工作,但这并不意味着我不会改变某些方面。如果我再做一次,我会把木头喷漆成黑色,这样胶带和零件就不会那么突出了。我也会制作一个更永久的电子板版本。

声明:本文内容及配图由入驻作者撰写或者入驻合作网站授权转载。文章观点仅代表作者本人,不代表电子发烧友网立场。文章及其配图仅供工程师学习之用,如有内容侵权或者其他违规问题,请联系本站处理。 举报投诉

- 相关下载

- 相关文章